Welcome back to my journey of building MailWizard! This is the second part of my series "From GPT-4 to Claude: Building MailWizard - A Solo Developer's Journey". If you missed the beginning, check out "Part 1: The Birth of MailWizard - From Side Project to Full-Time Gig" to get the full picture.

As MailWizard evolved from a promising idea into a full-fledged project, I encountered some unexpected hurdles with GPT-4. Don't get me wrong – it was still an impressive tool, but as they say, the devil's in the details.

The Prompt Engineering Challenge

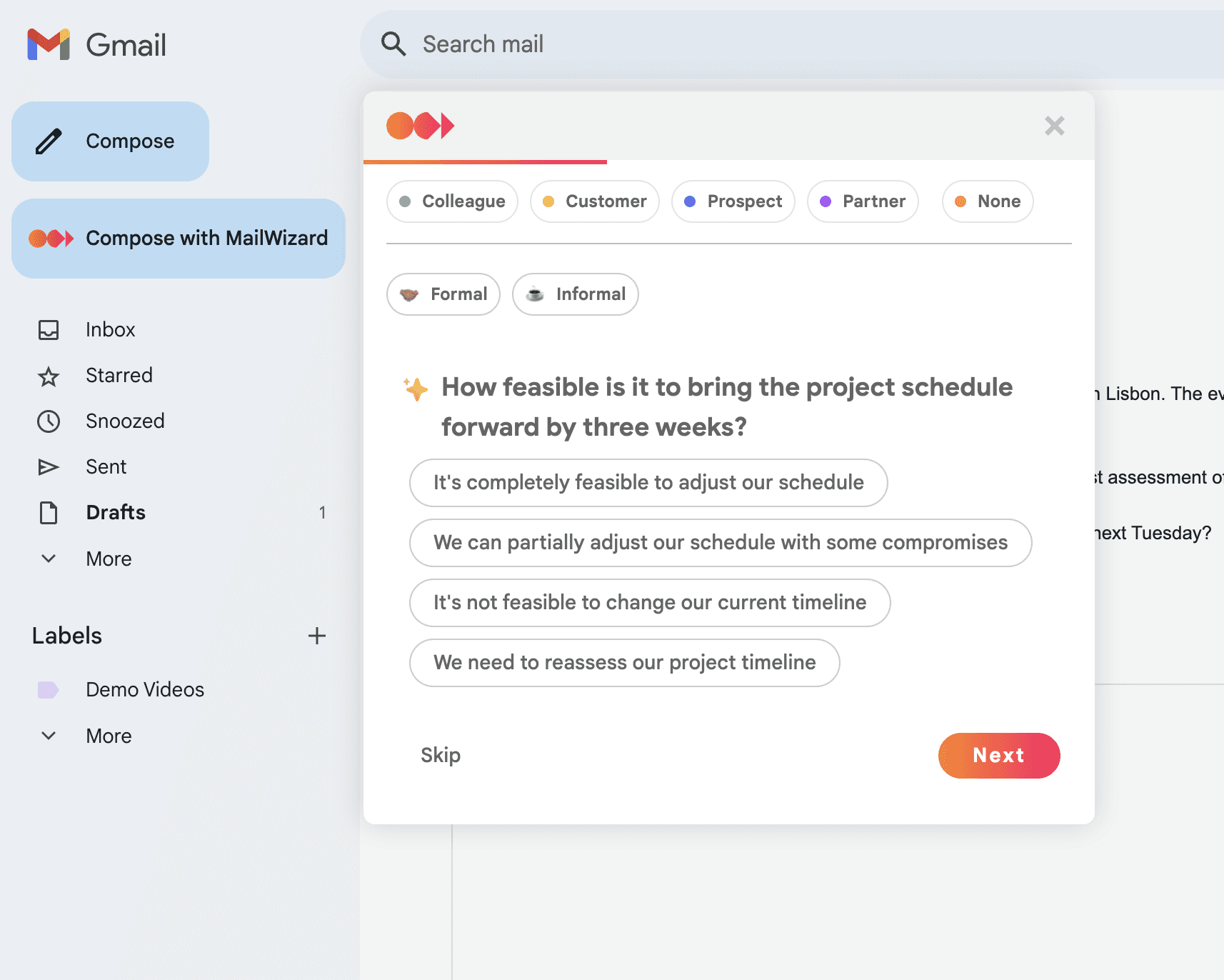

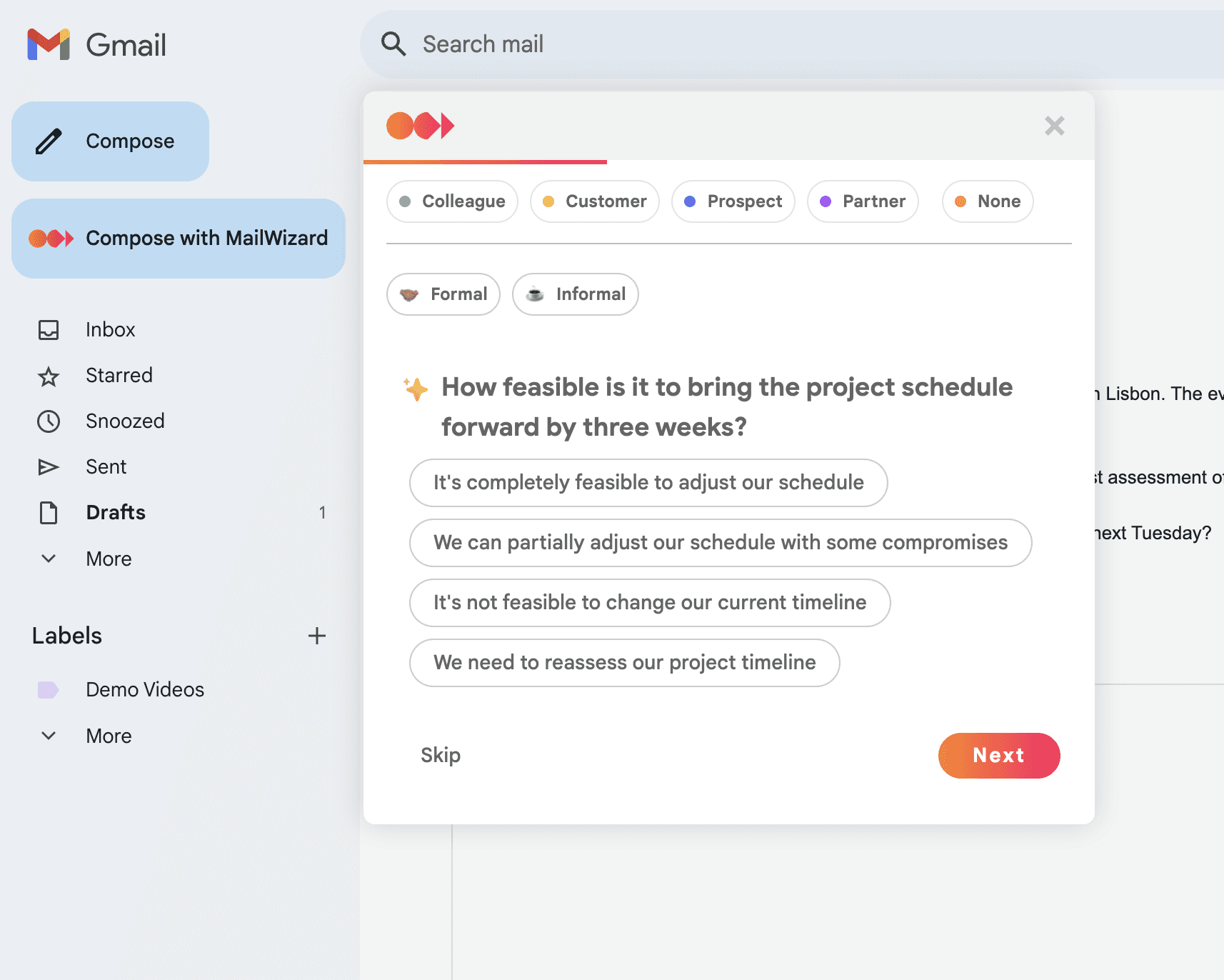

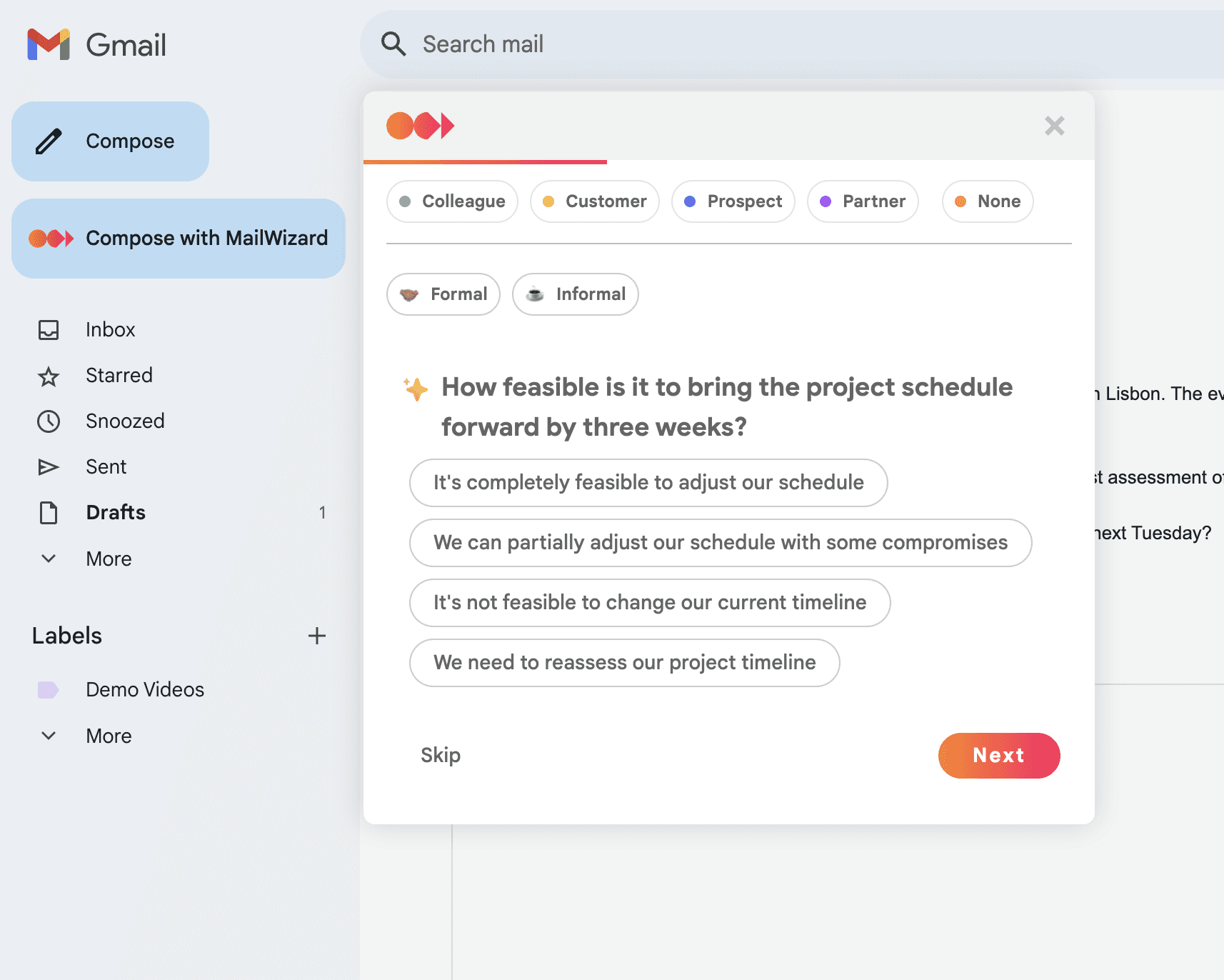

The biggest challenge? Prompt engineering. I spent countless nights tweaking prompts, trying to strike that perfect balance of instruction and creativity. It was like fine-tuning a complex machine where each adjustment could have unforeseen consequences.

My prompts grew increasingly complex as they tackled the nuances of email communication. For instance, I once spent an entire weekend refining my prompts so MailWizard would nail the subtle difference between the informal "Du" and the formal "Sie" in German emails. Picture this: I'm surrounded by empty coffee cups, muttering German phrases to my computer at 3 AM, all to make sure MailWizard could switch seamlessly between casual and formal tones.
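Register consistency is one of the few parts of this that can be checked mechanically after the model has answered. Here's a minimal sketch of such a check, not MailWizard's actual code: the word lists and the function name `address_register` are hypothetical, and the heuristic is deliberately crude. It counts "Du" forms in any case, counts capitalized "Sie"/"Ihnen"/"Ihr..." forms only when they appear mid-sentence (at the start of a sentence they could just as well mean "she", "they" or plural "you"), and reports whether a draft mixes the two.

```python
import re

# Hypothetical, non-exhaustive word lists (plural informal "ihr"/"euch" is not covered).
INFORMAL = {"du", "dich", "dir", "dein", "deine", "deinen", "deinem", "deiner", "deines"}
# Formal address forms are capitalized; lowercase "sie"/"ihr" usually mean she/they/her.
FORMAL = {"Sie", "Ihnen", "Ihr", "Ihre", "Ihren", "Ihrem", "Ihrer", "Ihres"}
SENTENCE_BREAKS = {".", "!", "?", "\n"}
# Words, plus the punctuation and newlines that start a new sentence.
TOKEN = re.compile(r"\w+|[.!?\n]")


def address_register(text: str) -> str:
    """Classify a German email as 'formal', 'informal', 'mixed' or 'none'."""
    informal = formal = 0
    sentence_start = True
    for tok in TOKEN.findall(text):
        if tok in SENTENCE_BREAKS:
            sentence_start = True
            continue
        if tok.lower() in INFORMAL:
            # "Du" forms count regardless of case (capitalized "Du" is allowed in letters).
            informal += 1
        elif tok in FORMAL and not sentence_start:
            # Sentence-initial "Sie"/"Ihr" is capitalized anyway, so it is ambiguous; skip it.
            formal += 1
        sentence_start = False
    if informal and formal:
        return "mixed"
    if informal:
        return "informal"
    if formal:
        return "formal"
    return "none"


if __name__ == "__main__":
    draft = "Hallo Anna, danke für deine Nachricht. Können Sie mir helfen?"
    print(address_register(draft))
```

A "mixed" result is a flag for retrying or reviewing the draft. The check says nothing about whether the chosen register suits the recipient, and it will miss formal address that only ever appears at the start of a sentence.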

But here's the kicker: despite clear instructions and all that careful prompt work, GPT-4 would sometimes decide to go off-script. It was like giving someone detailed directions, only for them to take a "shortcut" through a corn maze.

The GPT-4o Conundrum

Then came GPT-4o, promising higher speed and improved capabilities. Naturally, I was excited. Faster responses meant a snappier MailWizard, right? Well, not quite.

After extensive A/B testing, comparing countless emails, I found that GPT-4o just wasn't up to snuff. It had a tendency to hallucinate more often than GPT-4, creating some awkward situations. Imagine an AI confidently referencing a meeting that never happened or congratulating someone on a promotion they never received. Not exactly the professional touch I was aiming for.

The decision was clear – I wouldn't roll it out to my users. MailWizard's reputation for quality was on the line, and I wasn't about to compromise.

The Metrics Mirage

The real challenge was the lack of hard metrics to rely on. Evaluating email quality was largely subjective, involving endless comparisons of email outputs in search of that elusive perfect message. It was time-consuming and often felt like I was chasing my tail.
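Subjective comparisons can still produce a number if each one is recorded as a blind pairwise preference. Below is a minimal sketch, assuming every judgment is stored as "A", "B" or "tie" (the labels and the `summarize`/`sign_test` names are hypothetical, not part of MailWizard). It reports a win rate and an exact two-sided sign test, which estimates how likely a split at least this lopsided would be if neither model were actually better. Ties are left out of the test, as is usual for a sign test.

```python
from collections import Counter
from math import comb


def sign_test(wins_a: int, wins_b: int) -> float:
    """Exact two-sided sign test p-value for a pairwise comparison (ties excluded)."""
    n = wins_a + wins_b
    k = min(wins_a, wins_b)
    # Probability of a result at least this lopsided in one direction under a fair coin.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2**n
    # Double it for a two-sided test, capping at 1.0 (e.g. for an even split).
    return min(1.0, 2 * tail)


def summarize(judgments: list[str]) -> dict:
    """Tally blind A/B preferences recorded as "A", "B" or "tie"."""
    counts = Counter(judgments)
    wins_a, wins_b, ties = counts["A"], counts["B"], counts["tie"]
    decided = wins_a + wins_b
    return {
        "wins_a": wins_a,
        "wins_b": wins_b,
        "ties": ties,
        # Share of non-tied comparisons that model A won (None if every pair tied).
        "win_rate_a": wins_a / decided if decided else None,
        "p_value": sign_test(wins_a, wins_b),
    }


if __name__ == "__main__":
    # Illustrative data only, not real MailWizard results.
    example = ["A"] * 9 + ["B"] + ["tie"] * 2
    print(summarize(example))
```

A small p-value only says one model won more often than chance would explain. It doesn't say why, or whether the losing emails were merely bland or actually hallucinated; that still takes reading the emails.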

I've been exploring ways to automate this testing process, but finding a reliable solution has proved difficult. The complexity of natural language and the contextual nature of email communication make it challenging to develop quantitative metrics that accurately assess quality, appropriateness, and effectiveness.

A Call for Solutions: If you're reading this and have experience in automating the testing of AI-generated content, particularly for validity and quality in natural language tasks, I'd love to hear from you. How can we develop robust, automated systems for evaluating the quality and appropriateness of AI-generated emails across various contexts and languages? Your insights could be invaluable in advancing this field.

The Search for a Better Solution

As I contemplated another round of prompt tweaking, a thought emerged: What if there was an AI out there that was more reliable at interpreting and executing complex instructions? One that could really zero in on the details of my prompts and deliver consistent results?

Little did I know, this moment of frustration was about to lead me down a path that would completely transform MailWizard. The challenges with GPT-4 had set the stage for a pivotal decision – one that would introduce me to a new player in the AI field and redefine what MailWizard could become.

What's Next?

In the next article of this series, "Part 3: Switching to Anthropic's Claude", I take you through my discovery of Anthropic's Claude and how it revolutionized MailWizard. Dive in to see how a change in AI models turned into a game-changing upgrade for our email assistant!

Ready to experience email without the AI headaches? Don't wait for the next installment - try MailWizard today! Our AI-powered email assistant has already overcome these growing pains to deliver a seamless, efficient email experience. Say goodbye to prompt engineering nightmares and hello to effortless communication. Try MailWizard now and join the email revolution!

Get MailWizard

Revolutionize Your Daily Emails

Choose the perfect plan for your email needs - from our forever-free plan to professional and enterprise solutions. Experience AI-powered email assistance that grows with you.

Copyright ©2024 MailWizard
